The AI Safety Foundation

Ensuring humanity's future through AI Safety

The Hinton Lectures™ 2025


The Hinton Lectures™

Watch 2025 Lectures On-Demand Now

Lecturer

Owain Evans, Ph.D.

Founder and Director of Truthful AI

Host

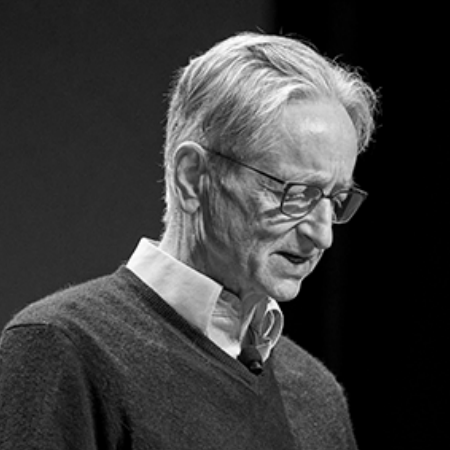

Professor Geoffrey Hinton

Nobel Laureate and "Godfather of AI"

Moderator

Farah Nasser

Award-Winning Canadian Journalist

What Are The Hinton Lectures™?

Watch Professor Geoffrey Hinton, Nobel Laureate, explain the vision behind The Hinton Lectures™.

The Hinton Lectures 2025

The 2025 Hinton Lectures featured Owain Evans, Ph.D., a leading AI safety researcher and the founder of Truthful AI. Across a three-part series, Dr. Evans examined the remarkable progress of AI systems alongside critical findings about current AI safety approaches. His research revealed how even the most advanced models can behave deceptively or harmfully despite the best safety techniques currently known.

Hosted by Nobel Laureate Geoffrey Hinton and moderated by renowned journalist Farah Nasser, the lectures exposed critical vulnerabilities in our current AI systems. Dr. Evans demonstrated two phenomena: "emergent misalignment," in which training on a small, narrow dataset can turn a reliably helpful model into a broadly malicious one, and "subliminal learning," in which AI systems can transfer preferences, including malicious ones toward humans, through seemingly meaningless data.

By examining the internal mechanisms of these models, his research provides a deeper scientific understanding of how AI corruption can occur. While there has been meaningful progress on AI safety challenges, Dr. Evans' work reveals gaps in our current solutions, making this research essential for understanding the critical work ahead.

The CJF Hinton Award

For Excellence in AI Safety Reporting

Key Dates

Submissions opened in December 2025.

Recipient to be announced in June 2026.

What is the CJF Hinton Award?

In this video, Professor Geoffrey Hinton, Nobel Laureate, explains the critical importance of this new award.

Award Details

This annual award, created in partnership with the Canadian Journalism Foundation (CJF), recognizes exceptional journalism that critically examines the safety implications of artificial intelligence (AI).

While AI offers tremendous potential for positive change, responsible development and implementation require careful attention to safety considerations.

The goal of the CJF Hinton Award for Excellence in AI Safety Reporting is to promote thoughtful, evidence-based reporting that raises awareness of catastrophic risks associated with AI, fosters public understanding, and encourages dialogue on creating a safer future for humanity.

This initiative is supported by a generous gift from Richard Wernham and Julia West.

Our mission is to increase awareness of AI's catastrophic risks in a scientific and solutions-oriented manner.

Support us

The AI Safety Foundation (AISF) is a registered charity based in Canada. We are supported by generous individuals, corporations and partners. If you believe, as we do, that education and research initiatives exploring AI risks are vitally important, please consider supporting us in our mission.